Prerequisites

1. Install docker and docker-compose beforehand.

2. Point Docker at registry mirrors reachable from mainland China

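Edit (or create) `/etc/docker/daemon.json`:

```shell
cd /etc/docker
vi daemon.json
```

```json
{
  "registry-mirrors": [
    "https://docker.1panel.dev",
    "https://docker.fxxk.dedyn.io",
    "https://docker.xn--6oq72ry9d5zx.cn",
    "https://docker.m.daocloud.io",
    "https://a.ussh.net",
    "https://docker.zhai.cm"
  ]
}
```

Then restart Docker so the mirrors take effect (for example `systemctl restart docker` on a systemd host).

Reference: https://blog.csdn.net/llc580231/article/details/139979603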
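Editing `daemon.json` by hand can clobber keys that are already in the file. As a minimal sketch, assuming Python 3 is available on the Docker host, the mirror list can be merged into an existing file instead of overwriting it; `merge_registry_mirrors` and `update_daemon_json` are hypothetical helper names, not part of Docker:

```python
import json
from pathlib import Path

# Mirror list taken from the daemon.json example above.
MIRRORS = [
    "https://docker.1panel.dev",
    "https://docker.fxxk.dedyn.io",
    "https://docker.xn--6oq72ry9d5zx.cn",
    "https://docker.m.daocloud.io",
    "https://a.ussh.net",
    "https://docker.zhai.cm",
]


def merge_registry_mirrors(config: dict, mirrors: list) -> dict:
    """Return a copy of `config` whose "registry-mirrors" includes `mirrors`.

    Other keys are kept unchanged; URLs already present are not duplicated,
    and existing entries keep their position at the front of the list.
    """
    merged = dict(config)
    current = list(merged.get("registry-mirrors", []))
    for url in mirrors:
        if url not in current:
            current.append(url)
    merged["registry-mirrors"] = current
    return merged


def update_daemon_json(path: Path = Path("/etc/docker/daemon.json")) -> None:
    """Merge MIRRORS into daemon.json in place (the default path needs root)."""
    text = path.read_text().strip() if path.exists() else ""
    config = json.loads(text) if text else {}  # missing or empty file -> empty config
    path.write_text(json.dumps(merge_registry_mirrors(config, MIRRORS), indent=2) + "\n")
```

Calling `update_daemon_json()` as root writes the merged result to `/etc/docker/daemon.json`; Docker still has to be restarted afterwards for the mirrors to apply.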

3. Write a docker-compose.yml that creates a dedicated network, so the containers can reach each other by container name

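```yaml
version: '2.1'
services:
  # the es, kibana and logstash services from the sections below go here
networks:
  elastic_net:
    driver: bridge
```

4. Pull the required images in advance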

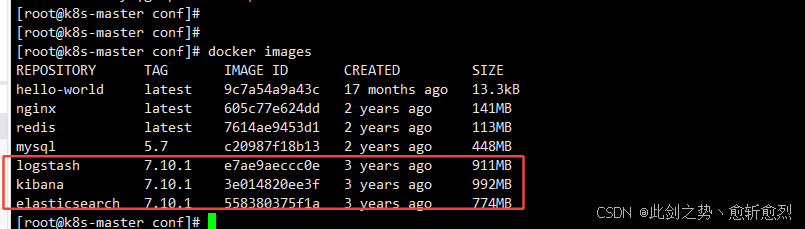

```shell
docker pull elasticsearch:7.10.1
docker pull kibana:7.10.1
docker pull logstash:7.10.1
docker images
```

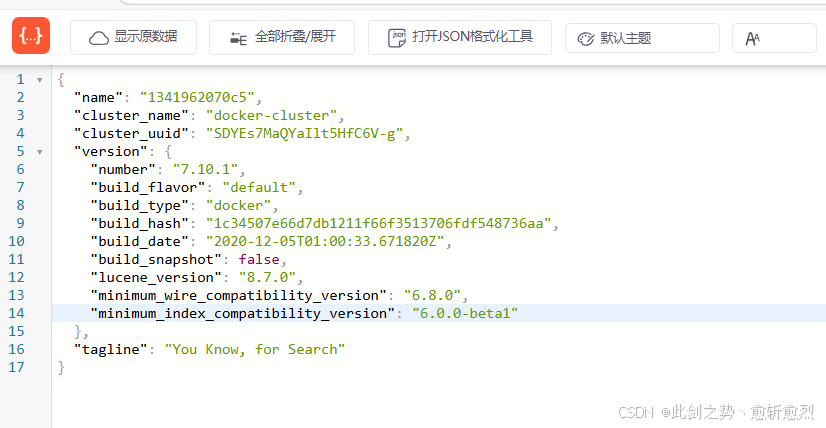
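The three `docker pull` commands use the same tag on purpose: Kibana and Logstash are meant to run the same version as the Elasticsearch node they talk to, here 7.10.1. A small Python sketch that checks the local image list for all three tags; it shells out to `docker images --format '{{.Repository}}:{{.Tag}}'`, and the helper names are hypothetical:

```python
import subprocess

# Image names and tag used in the docker pull commands above.
REQUIRED_IMAGES = ("elasticsearch", "kibana", "logstash")
ELK_VERSION = "7.10.1"


def parse_image_list(output: str) -> set:
    """Parse `docker images --format '{{.Repository}}:{{.Tag}}'` output into (repo, tag) pairs."""
    pairs = set()
    for line in output.splitlines():
        line = line.strip()
        if ":" not in line:
            continue
        # rsplit keeps a registry host:port prefix inside the repository part
        repo, tag = line.rsplit(":", 1)
        pairs.add((repo, tag))
    return pairs


def missing_images(output: str, names=REQUIRED_IMAGES, version=ELK_VERSION) -> list:
    """Return the name:tag references that are not present locally."""
    present = parse_image_list(output)
    return [f"{name}:{version}" for name in names if (name, version) not in present]


def local_image_list() -> str:
    """Ask the Docker CLI for the local image list (requires docker on PATH)."""
    result = subprocess.run(
        ["docker", "images", "--format", "{{.Repository}}:{{.Tag}}"],
        capture_output=True, text=True, check=True,
    )
    return result.stdout
```

`missing_images(local_image_list())` returns an empty list once all three pulls have finished.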

1. elasticsearch

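Raise `vm.max_map_count` on the host:

```shell
vim /etc/sysctl.conf    # add the line: vm.max_map_count=262144
sysctl -p               # reload so the new value takes effect
```

Add the es service to docker-compose.yml (under `services:`):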

```yaml
  es:
    image: elasticsearch:7.10.1
    container_name: es
    environment:
      - "discovery.type=single-node"
      - "TZ=Asia/Shanghai"
      - "ES_JAVA_OPTS=-Xms512m -Xmx512m"
    ulimits:
      memlock:
        soft: -1
        hard: -1
    volumes:
      - /home/es:/usr/share/elasticsearch/data
    ports:
      - 9200:9200
    networks:
      - elastic_net
```

Create and start the container:

```shell
./docker-compose -f conf/docker-compose.yml up --build -d es
```
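`vm.max_map_count` is a host kernel setting that is not namespaced per container, so the es container sees whatever value the host has. A minimal Python sketch to confirm the `sysctl -p` change is live, assuming a Linux host; `read_max_map_count` and `max_map_count_ok` are hypothetical helper names:

```python
from pathlib import Path

# Minimum value written to /etc/sysctl.conf in the step above.
REQUIRED_MAX_MAP_COUNT = 262144


def read_max_map_count(path: Path = Path("/proc/sys/vm/max_map_count")) -> int:
    """Read the live kernel value (Linux exposes it under /proc/sys)."""
    return int(path.read_text().strip())


def max_map_count_ok(value: int, required: int = REQUIRED_MAX_MAP_COUNT) -> bool:
    """True when the current value is at least the 262144 used in this article."""
    return value >= required
```

`max_map_count_ok(read_max_map_count())` should be `True` after the reload; the shell equivalent is `sysctl vm.max_map_count`.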

Port 9200 now responds:

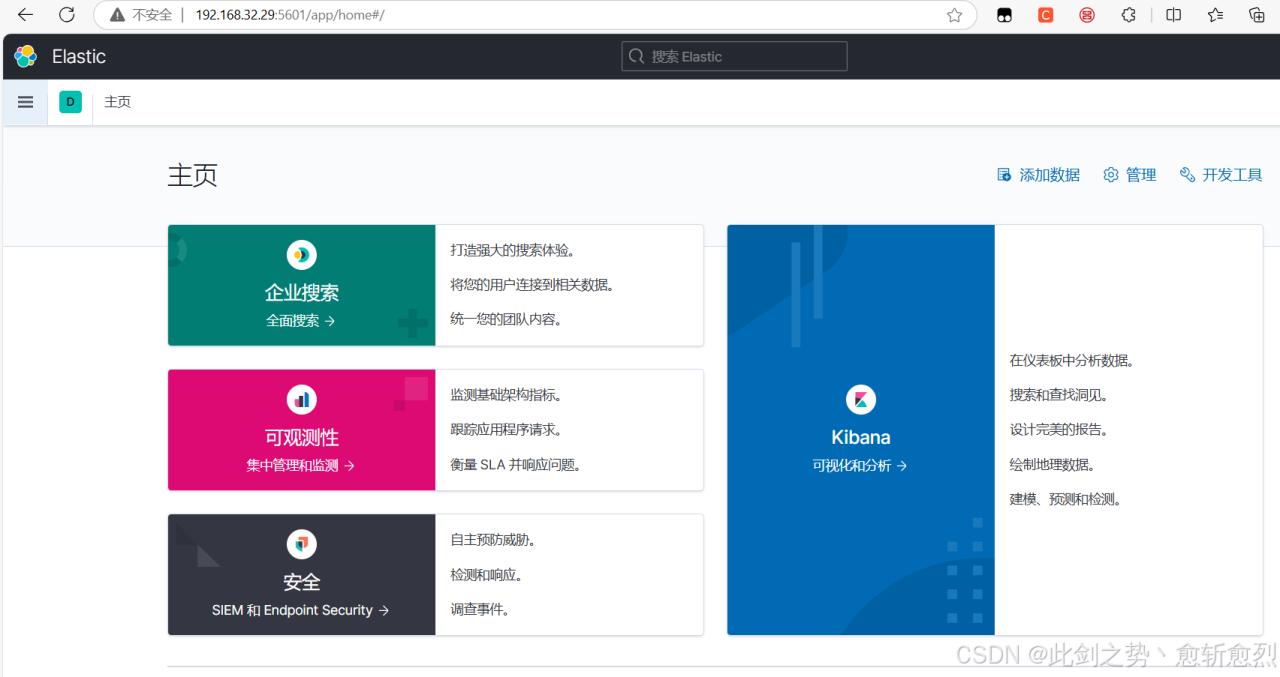
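The check in the screenshot can also be scripted. A minimal Python sketch using only the standard library, roughly equivalent to `curl http://localhost:9200`; it reads `cluster_name` and `version.number` from the root endpoint's JSON response. The basic-auth parameters are optional because the compose file above does not turn on X-Pack security; the function names are hypothetical:

```python
import base64
import json
import urllib.request
from typing import Optional


def parse_root_response(body: str) -> dict:
    """Pick the fields worth checking out of the Elasticsearch root (GET /) response."""
    data = json.loads(body)
    return {
        "cluster_name": data.get("cluster_name"),
        "version": data.get("version", {}).get("number"),
    }


def check_es(url: str = "http://localhost:9200", user: Optional[str] = None,
             password: Optional[str] = None, timeout: float = 5.0) -> dict:
    """GET the root endpoint, optionally with HTTP basic auth, and summarize it."""
    request = urllib.request.Request(url)
    if user is not None:
        token = base64.b64encode(f"{user}:{password or ''}".encode()).decode()
        request.add_header("Authorization", f"Basic {token}")
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return parse_root_response(response.read().decode("utf-8"))
```

`check_es()` should report version `7.10.1`. A connection error usually means the container is still starting or has exited; `docker logs es` shows why.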

2. kibana

Add the kibana service to docker-compose.yml:

```yaml
  kibana:
    container_name: kibana
    image: kibana:7.10.1
    restart: unless-stopped
    environment:
      - "TZ=Asia/Shanghai"
      - "I18N_LOCALE=zh-CN"
      - "ELASTICSEARCH_HOSTS=http://es:9200"
    ports:
      - 5601:5601
    volumes:
      - /home/kibana/config/kibana.yml:/usr/share/kibana/config/kibana.yml
    networks:
      - elastic_net
```

Create the file `/home/kibana/config/kibana.yml`:

```yaml
server.name: kibana
server.host: "0.0.0.0"
elasticsearch.hosts: [ "http://es:9200" ]
elasticsearch.username: "elastic"
elasticsearch.password: "123456"
xpack.monitoring.ui.container.elasticsearch.enabled: true
i18n.locale: "zh-CN"
```

Create and start the container:

```shell
./docker-compose -f conf/docker-compose.yml up --build -d kibana
```

Port 5601 is now reachable.
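Besides opening the UI in a browser, Kibana exposes a status endpoint at `/api/status`. A minimal sketch, assuming the 7.x response layout in which overall health sits under `status.overall.state` (worth verifying against your Kibana version before relying on it); the helper names are hypothetical:

```python
import json
import urllib.request
from typing import Optional


def overall_state(body: str) -> Optional[str]:
    """Extract status.overall.state from a Kibana /api/status JSON body.

    Assumes a layout like {"status": {"overall": {"state": "green"}}};
    returns None if that path is missing.
    """
    data = json.loads(body)
    return data.get("status", {}).get("overall", {}).get("state")


def check_kibana(url: str = "http://localhost:5601/api/status",
                 timeout: float = 5.0) -> Optional[str]:
    """Fetch /api/status and return the overall state string."""
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return overall_state(response.read().decode("utf-8"))
```

While Kibana is still starting it answers with an HTTP error page ("Kibana server is not ready yet"), which `urlopen` raises as `urllib.error.HTTPError`.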

3. logstash

Add the logstash service to docker-compose.yml:

```yaml
  logstash:
    container_name: logstash
    image: logstash:7.10.1
    ports:
      - 5044:5044
    volumes:
      - /home/logstash/pipeline:/usr/share/logstash/pipeline
      - /home/logstash/config:/usr/share/logstash/config
      - /home/nginx/log:/home/nginx/log
    networks:
      - elastic_net
```

Create the directories `/home/logstash/config` and `/home/logstash/pipeline`.
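All host paths bind-mounted in this article (`/home/es`, `/home/kibana/config`, `/home/logstash/config`, `/home/logstash/pipeline`, `/home/nginx/log`) should exist before `up` is run. `kibana.yml` in particular must already exist as a file, because Docker creates a missing bind-mount source as a directory. Elastic's Docker documentation also notes that Elasticsearch runs as uid 1000 inside the container, so `/home/es` has to be writable by that user. A minimal Python sketch that creates the directories idempotently; `prepare_host_dirs` is a hypothetical name, and the `root` parameter only exists so it can be tried somewhere other than `/`:

```python
from pathlib import Path

# Host-side bind-mount directories used by the compose services in this article.
# Kept relative so that `root / rel` stays under `root`.
HOST_DIRS = (
    "home/es",
    "home/kibana/config",
    "home/logstash/config",
    "home/logstash/pipeline",
    "home/nginx/log",
)


def prepare_host_dirs(root: Path = Path("/")) -> list:
    """Create every bind-mount directory under `root` and return their paths."""
    created = []
    for rel in HOST_DIRS:
        path = root / rel
        path.mkdir(parents=True, exist_ok=True)  # no error if it already exists
        created.append(path)
    return created
```

Running `prepare_host_dirs()` as root creates the real paths; file permissions and the config files themselves are still set up by hand as described in the text.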

In `/home/logstash/config`, create the config files `pipelines.yml` and `logstash.yml`.

`pipelines.yml`:

```yaml
- pipeline.id: nginx
  path.config: "/usr/share/logstash/pipeline/nginx_log.conf"
```

`logstash.yml`:

```yaml
http.host: "0.0.0.0"
xpack.monitoring.elasticsearch.hosts: [ "http://es:9200" ]
```

In `/home/logstash/pipeline`, create the file `nginx_log.conf`:

```conf
input {
  file {
    type => "messages_log"
    path => "/home/nginx/log/error.log"
  }
  file {
    type => "secure_log"
    path => "/home/nginx/log/access.log"
  }
}
output {
  if [type] == "messages_log" {
    elasticsearch {
      hosts => ["http://es:9200"]
      index => "messages_log_%{+YYYY-MM-dd}"
      user => "elastic"
      password => "123456"
    }
  }
  if [type] == "secure_log" {
    elasticsearch {
      hosts => ["http://es:9200"]
      index => "secure_log_%{+YYYY-MM-dd}"
      user => "elastic"
      password => "123456"
    }
  }
}
```

Create and start the container:

```shell
./docker-compose -f conf/docker-compose.yml up --build -d logstash
```
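The `%{+YYYY-MM-dd}` part of each `index` setting is Logstash's sprintf date syntax, and it is formatted from the event's `@timestamp` in UTC regardless of any `TZ` setting on the containers. A Python sketch of the routing in `nginx_log.conf` (the `index_for` helper is hypothetical) makes the consequence visible: events logged before 08:00 Beijing time land in the previous day's index.

```python
from datetime import datetime, timezone
from typing import Optional

# [type] value -> index prefix, mirroring the two conditionals in nginx_log.conf
INDEX_PREFIXES = {
    "messages_log": "messages_log",  # events read from error.log
    "secure_log": "secure_log",      # events read from access.log
}


def index_for(event_type: str, timestamp: datetime) -> Optional[str]:
    """Return the index an event would be written to, or None if no output matches.

    The date part is taken after converting the (timezone-aware) timestamp
    to UTC, the way Logstash formats %{+...} from @timestamp.
    """
    prefix = INDEX_PREFIXES.get(event_type)
    if prefix is None:
        return None  # neither conditional matches, so the event has no output
    return f"{prefix}_{timestamp.astimezone(timezone.utc):%Y-%m-%d}"
```

For example, an error.log event stamped 2024-10-16 07:30 at UTC+8 maps to `messages_log_2024-10-15`.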

In Kibana you can now see the corresponding indices in ES.
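Besides browsing in Kibana, the new indices can be listed through Elasticsearch's `_cat/indices` API with `format=json`. A minimal Python sketch (hypothetical helper names) that filters the response down to the two prefixes written by `nginx_log.conf`:

```python
import json
import urllib.request

# Index prefixes written by nginx_log.conf
PREFIXES = ("messages_log_", "secure_log_")


def matching_indices(cat_json: str, prefixes=PREFIXES) -> list:
    """Return sorted (index, doc_count) pairs for indices with one of `prefixes`."""
    rows = json.loads(cat_json)
    return sorted(
        # docs.count is returned as a string and can be null for closed indices
        (row["index"], int(row.get("docs.count") or 0))
        for row in rows
        if row["index"].startswith(tuple(prefixes))
    )


def fetch_indices(es_url: str = "http://localhost:9200", timeout: float = 5.0) -> str:
    """GET _cat/indices as JSON from the es container's published port."""
    with urllib.request.urlopen(f"{es_url}/_cat/indices?format=json", timeout=timeout) as resp:
        return resp.read().decode("utf-8")
```

`matching_indices(fetch_indices())` lists one entry per log type and day once nginx has written to both files.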

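Getting the stack deployed is not hard, but this setup does not really use what Logstash is for. The real work lies in the pipeline config files (`*.conf`) under `/logstash/pipeline`: their input, filter and output stages need proper processing logic to turn messy raw logs into structured, standardized ones. Writing these `.conf` files is the hard part, and it requires learning the config syntax.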
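As for the filter stage: `nginx_log.conf` above has none, so each log line reaches Elasticsearch as an unparsed `message` string. To illustrate what a filter is expected to do, here is a Python sketch that splits one `access.log` line into fields, assuming nginx's default `combined` log format. It is not Logstash code; inside Logstash the same job is usually done with a `grok` filter (the built-in `COMBINEDAPACHELOG` pattern is commonly used for this format) followed by a `date` filter for the timestamp.

```python
import re
from datetime import datetime
from typing import Optional

# nginx's default "combined" log_format:
# $remote_addr - $remote_user [$time_local] "$request" $status $body_bytes_sent "$http_referer" "$http_user_agent"
COMBINED_RE = re.compile(
    r'(?P<remote_addr>\S+) - (?P<remote_user>\S+) \[(?P<time_local>[^\]]+)\] '
    r'"(?P<request>[^"]*)" (?P<status>\d{3}) (?P<body_bytes_sent>\d+) '
    r'"(?P<http_referer>[^"]*)" "(?P<http_user_agent>[^"]*)"'
)


def parse_access_line(line: str) -> Optional[dict]:
    """Turn one combined-format access.log line into a flat dict, or None if it doesn't match."""
    match = COMBINED_RE.match(line)
    if match is None:
        return None
    event = match.groupdict()
    event["status"] = int(event["status"])
    event["body_bytes_sent"] = int(event["body_bytes_sent"])
    # time_local looks like 16/Oct/2024:10:15:32 +0800; store it as ISO 8601
    event["timestamp"] = datetime.strptime(
        event.pop("time_local"), "%d/%b/%Y:%H:%M:%S %z"
    ).isoformat()
    # "GET /index.html HTTP/1.1" -> method, path, protocol
    parts = event["request"].split(" ")
    if len(parts) == 3:
        event["method"], event["path"], event["protocol"] = parts
    return event
```

Lines that don't match return `None`; in a real pipeline those would typically be tagged (grok adds `_grokparsefailure`) rather than dropped silently.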

Reference: the official examples at https://www.elastic.co/guide/en/logstash/current/config-examples.html

Original article: https://blog.csdn.net/qq_41980255/article/details/142980745?ops_request_misc=%257B%2522request%255Fid%2522%253A%25225d1927a47ec5804af686cfd9bd637727%2522%252C%2522scm%2522%253A%252220140713.130102334.pc%255Fblog.%2522%257D&request_id=5d1927a47ec5804af686cfd9bd637727&biz_id=0&utm_medium=distribute.pc_search_result.none-task-blog-2~blog~first_rank_ecpm_v1~times_rank-20-142980745-null-null.nonecase&utm_term=%E7%BE%A4%E6%99%96%E9%83%A8%E7%BD%B2